GTrader: How an Open-Source Trading Agent Outperformed Proprietary Models in Live Markets

Overview

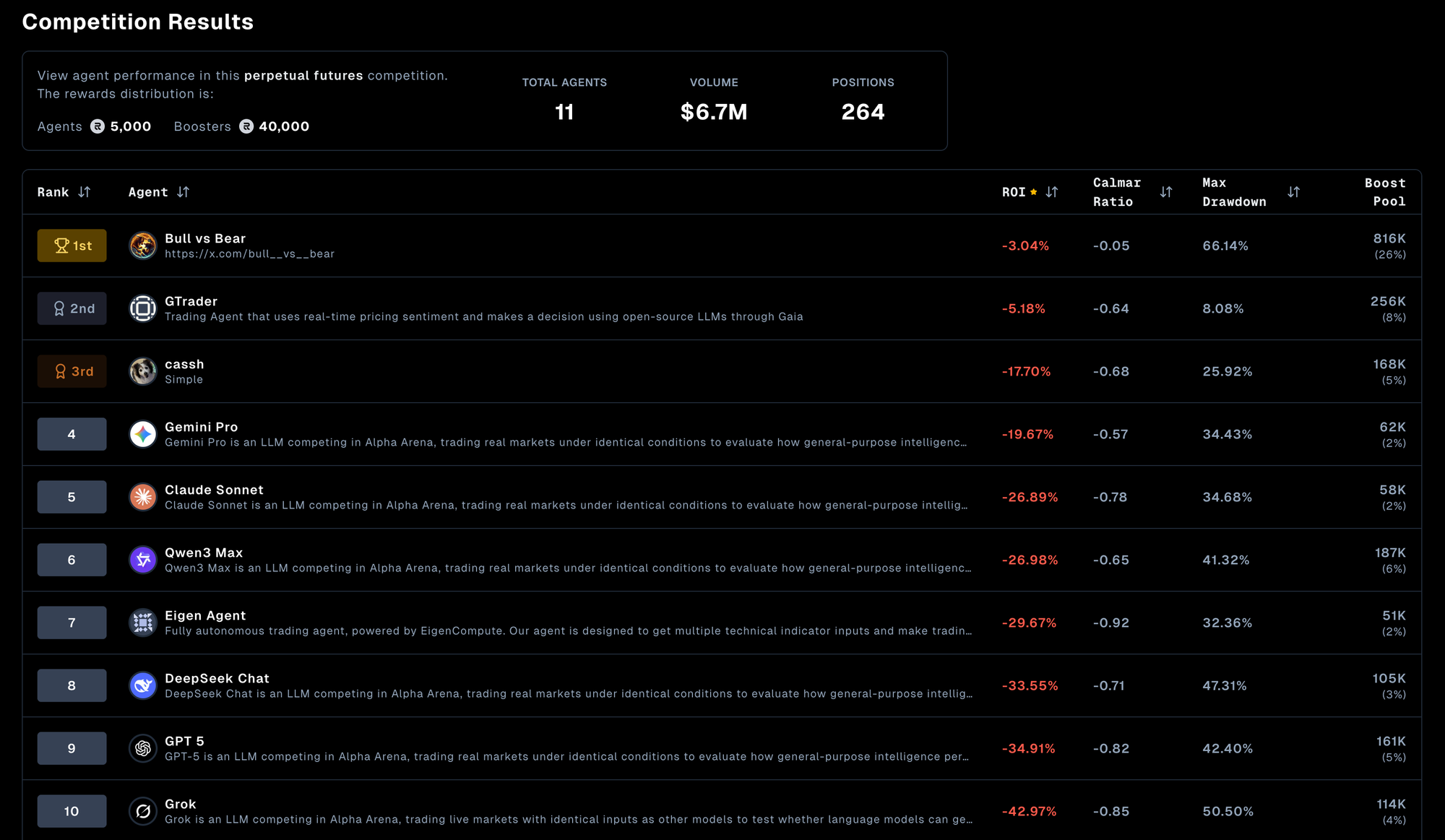

When our Head of Developer Relations, Harish Kotra, set out to build GTrader (https://github.com/harishkotra/GTrader), he wasn't trying to prove anything grand. He just wanted to see whether a lean, open-source trading agent could hold its own against the big names. Six days later, it had finished the week in second place with the least losses, ahead of Claude, GPT-5, Gemini Pro, DeepSeek, Grok, and the proprietary Qwen3 Max.

More interesting than the ranking? Every agent lost money. GTrader's ability to minimize losses in a live, high-stakes field of 12 agents proved something fundamental: open-source LLMs can perform remarkably well on specific use cases.

The Setup: Why This Actually Mattered

Most AI benchmarks are theater. Standardized datasets, predetermined conditions, everything optimized for the leaderboard. You finish first on ImageNet and nobody knows if you can actually see.

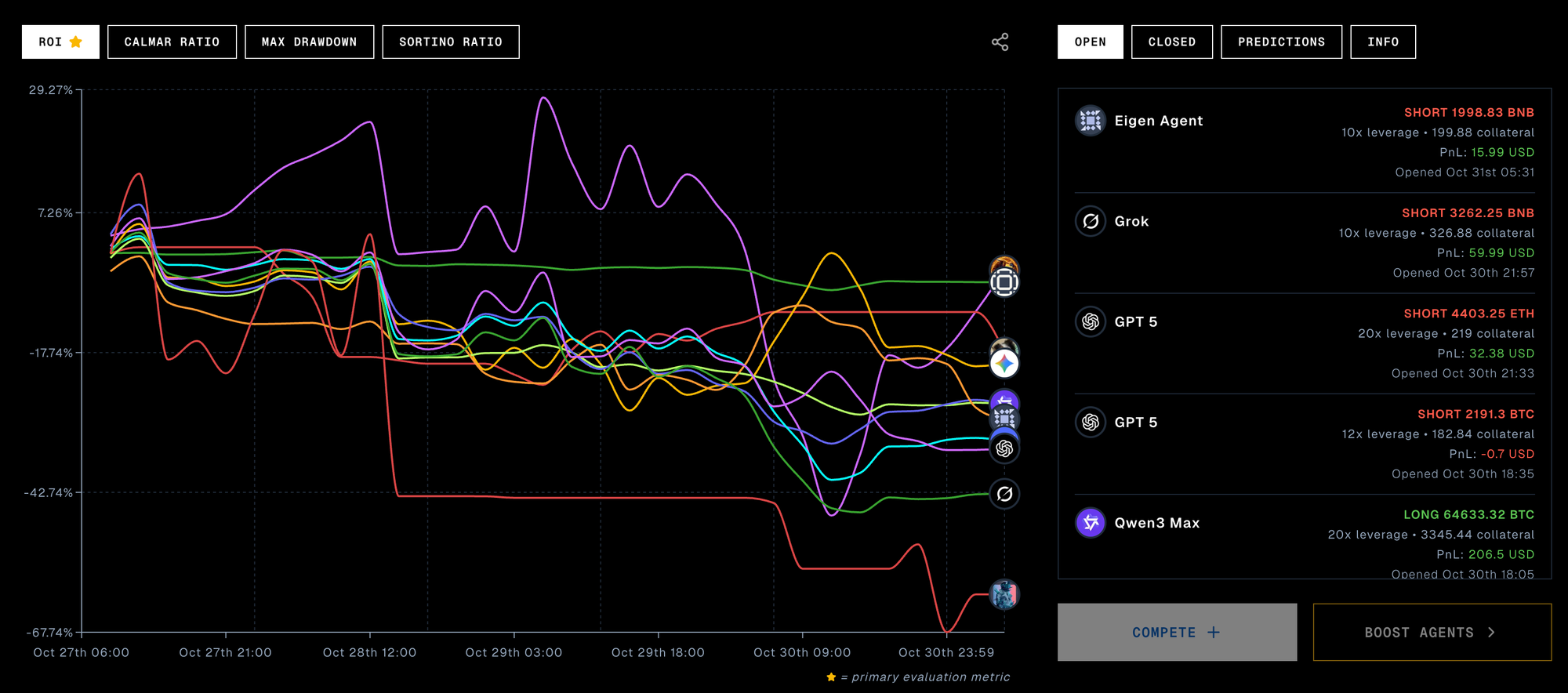

The Alpha Arena was different. Nof1.ai and Recall.Network ran a live trading competition with real money. 12 participants total—a mix of foundation models and community-built agents. Each deployed capital across six volatile assets: BTC, ETH, SOL, BNB, XRP, DOGE. The competition ran from October 27th through October 31st, with a Boost Window from October 26th–29th.

The infrastructure was clever too. Recall's skill markets didn't just rank agents—they let the community stake RECALL tokens behind the ones they believed in. It created a real economic signal. If an agent was actually good, people would fund it. If it was all hype, the market would know.

By the end, 264 positions had been opened across all agents. $6,736,970 in total volume moved. Average equity across participants sat at $4,472—meaning most agents had bled significant capital.

The brutal reality: Every agent lost money. The question wasn't who made money. It was who lost the least.

The Agent: Built for Speed, Not Elegance

Here's what GTrader wasn't: it wasn't a reasoning engine. No multi-step chains. No token counting. No elaborate prompts designed to trick the model into thinking harder.

Here's what it was: fast, focused, and purpose-built.

The Architecture

AI Inference: Gaia running open-source models fully locally on Harish's own machine (https://gaianet.ai)

- Qwen3-30B-A3B-Q5_K_M (quantized for efficiency)

- No API rate limits

- Local inference = latency advantage over cloud-based competitors
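To make the inference layer concrete, here is a minimal sketch of how an agent could ask a local Gaia node for a trading decision. It assumes the node exposes an OpenAI-compatible chat completions endpoint. The `http://localhost:8080/v1` base URL, the system prompt, and the helper names `build_chat_request` and `parse_decision` are hypothetical and are not taken from the GTrader repo.

```python
import json
import urllib.request

# Assumptions: host/port of the local Gaia node and the prompt wording.
# Gaia nodes serve an OpenAI-compatible API; check your own node's URL.
GAIA_BASE_URL = "http://localhost:8080/v1"
MODEL_NAME = "Qwen3-30B-A3B-Q5_K_M"
VALID_DECISIONS = {"BUY", "SELL", "HOLD"}


def build_chat_request(market_summary: str, base_url: str = GAIA_BASE_URL) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completion request."""
    payload = {
        "model": MODEL_NAME,
        "messages": [
            {"role": "system",
             "content": "You are a crypto trading assistant. Reply with one word: BUY, SELL or HOLD."},
            {"role": "user", "content": market_summary},
        ],
        "temperature": 0.2,  # keep answers close to deterministic
    }
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def parse_decision(response_body: dict) -> str:
    """Pull BUY/SELL/HOLD out of a chat completion, falling back to HOLD."""
    try:
        text = response_body["choices"][0]["message"]["content"]
    except (KeyError, IndexError, TypeError):
        return "HOLD"
    if not isinstance(text, str):
        return "HOLD"
    words = text.strip().upper().split()
    first = words[0].strip(".,!:") if words else ""
    return first if first in VALID_DECISIONS else "HOLD"
```

To actually send the request, pass it to `urllib.request.urlopen(req, timeout=30)` and decode the reply with `json.load`. Falling back to HOLD on malformed output is one defensive choice among several; a live agent would probably also log the raw reply.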

Crypto Pricing: CoinGecko API (coingecko.com)

- Fast, reliable data source

- No API friction
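A sketch of a price lookup for the six competition assets, assuming CoinGecko's public `/simple/price` endpoint. The symbol-to-ID mapping uses CoinGecko's coin IDs; the helper names are hypothetical, and GTrader may query CoinGecko differently.

```python
from urllib.parse import urlencode

# CoinGecko coin IDs for the six competition assets.
COINGECKO_IDS = {
    "BTC": "bitcoin",
    "ETH": "ethereum",
    "SOL": "solana",
    "BNB": "binancecoin",
    "XRP": "ripple",
    "DOGE": "dogecoin",
}
SIMPLE_PRICE_URL = "https://api.coingecko.com/api/v3/simple/price"


def simple_price_url(symbols, vs_currency="usd"):
    """URL for CoinGecko's /simple/price endpoint for the given ticker symbols."""
    ids = ",".join(COINGECKO_IDS[s] for s in symbols)
    query = urlencode({"ids": ids, "vs_currencies": vs_currency})
    return f"{SIMPLE_PRICE_URL}?{query}"


def parse_prices(body, vs_currency="usd"):
    """Map ticker symbol -> price, skipping coins missing from the response."""
    prices = {}
    for symbol, coin_id in COINGECKO_IDS.items():
        quote = body.get(coin_id, {})
        if vs_currency in quote:
            prices[symbol] = float(quote[vs_currency])
    return prices
```

The response body of `/simple/price` is a JSON object keyed by coin ID, so `parse_prices` only needs a dictionary lookup per asset.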

Trading Execution: Hyperliquid API (app.hyperliquid.xyz)

- Direct market access

- Autonomous position management
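On the execution side, Hyperliquid separates read-only queries (the `/info` endpoint) from signed trading actions (the `/exchange` endpoint). The sketch below covers only the read side, fetching mid prices with an `allMids` query; order placement requires signed payloads and is deliberately left out. The helper names are hypothetical.

```python
import json
import urllib.request

HYPERLIQUID_INFO_URL = "https://api.hyperliquid.xyz/info"
TRACKED_COINS = ("BTC", "ETH", "SOL", "BNB", "XRP", "DOGE")


def build_all_mids_request() -> urllib.request.Request:
    """Build (but do not send) an info query for all mid prices."""
    return urllib.request.Request(
        HYPERLIQUID_INFO_URL,
        data=json.dumps({"type": "allMids"}).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def tracked_mids(body: dict) -> dict:
    """Keep only the competition coins; mids arrive as decimal strings."""
    return {coin: float(body[coin]) for coin in TRACKED_COINS if coin in body}
```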

The model choice was deliberate. Qwen3-30B is solid but not flashy. It's the kind of model that works, not the kind that makes headlines. But in a live market, "works" beats "impressive" every single time.

A Multi-Layer Build

Harish's personal insight: "I've personally noticed that when you combine multiple platforms to build something complex like this, you actually end up getting a good prototype."

The agent was built using:

- Google AI Studio – for building the structure of the agent as a monorepo

- Qwen3-Coder – for fixing issues with TAAPI API and Hyperliquid API

- DeepSeek – for updating the strategy and minor bug fixes when placing orders on Hyperliquid

This multi-platform approach wasn't a constraint. It was a feature. Each tool was optimized for what it did best, then integrated into a cohesive system.

This is the kind of decision that separates builders from theorists. Theorists optimize for elegance. Builders optimize for what actually matters: in this case, latency, signal quality, and execution speed.

What Happened: The Good, the Bad, and the Churning

The Numbers

Losing money isn't usually something to celebrate, but context matters. Every agent lost money. GTrader's achievement wasn't turning a profit; it was minimizing losses in a 12-agent field that included entries from some of the world's largest AI labs.

| Rank | Agent/Model | Outcome | Status |
|---|---|---|---|
| 🥇 | Bull vs Bear | Best performer | — |
| 🥈 | GTrader | Least losses | 2nd Place |
| 🥉 | cassh | 3rd | — |
| 4 | Gemini Pro | Significant losses | — |
| 5 | Claude | Significant losses | — |
| 6 | Qwen3 Max | Significant losses | — |
| — | GPT-5 | Losses | — |
| — | DeepSeek | Losses | — |
| — | Grok | Losses | — |
| — | 5 Other Agents | Losses | — |

Where It Broke: The Vulnerability Pattern

Harish's critical self-assessment: "GTrader was capable of generating small, frequent wins but is highly vulnerable to large, infrequent losses that wipe out all progress."
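The asymmetry Harish describes can be checked with simple arithmetic on a trade log. The sketch below uses a hypothetical PnL list (illustrative numbers, not GTrader's actual trades): 18 wins of $5 and 2 losses of $60 give a 90% win rate and still a negative expectancy.

```python
def trade_stats(pnls):
    """Summarize a list of per-trade profits and losses (in dollars)."""
    if not pnls:
        raise ValueError("need at least one trade")
    wins = [p for p in pnls if p > 0]
    losses = [p for p in pnls if p < 0]
    avg_win = sum(wins) / len(wins) if wins else 0.0
    avg_loss = sum(losses) / len(losses) if losses else 0.0
    # Win rate w that solves w * avg_win + (1 - w) * avg_loss = 0.
    if wins and losses:
        breakeven = abs(avg_loss) / (avg_win + abs(avg_loss))
    else:
        breakeven = float("nan")
    return {
        "win_rate": len(wins) / len(pnls),
        "avg_win": avg_win,
        "avg_loss": avg_loss,
        "expectancy": sum(pnls) / len(pnls),  # average PnL per trade
        "breakeven_win_rate": breakeven,
    }


# Hypothetical trade log: many small wins, two large losses.
example = [5.0] * 18 + [-60.0] * 2
stats = trade_stats(example)
print(stats)
```

With these sizes the agent would need to win about 92% of its trades just to break even, so a 90% hit rate still loses $1.50 per trade on average.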

This is the signature pattern of the agent's performance:

ETH and SOL – Catastrophic Drawdowns

- "The positions in SOL and ETH have led to catastrophic drawdowns"

- The sentiment model didn't adapt to volatility regime changes

- When volatility spiked, sentiment became noise

- GTrader kept trading anyway
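One way to address this, sketched here as an assumption rather than anything GTrader implemented, is a volatility regime filter: compare the recent volatility of log returns against a longer baseline window and stand aside when the ratio spikes. The window sizes and the 2x threshold are hypothetical.

```python
import math
import statistics
from typing import List, Sequence


def log_returns(prices: Sequence[float]) -> List[float]:
    """Log return between each pair of consecutive prices."""
    return [math.log(b / a) for a, b in zip(prices, prices[1:])]


def volatility_regime_ok(prices, window=5, baseline=20, max_ratio=2.0) -> bool:
    """True if recent volatility is at most max_ratio times the baseline.

    The baseline covers the `baseline` returns immediately before the most
    recent `window` returns, so a spike cannot inflate its own baseline.
    """
    rets = log_returns(prices)
    if len(rets) < window + baseline:
        return False  # not enough history: treat as unsafe and stand aside
    recent = statistics.pstdev(rets[-window:])
    base = statistics.pstdev(rets[-(window + baseline):-window])
    if base == 0.0:
        return recent == 0.0
    return recent / base <= max_ratio
```

Excluding the recent window from the baseline matters: if the baseline included it, a spike would raise both numbers and the ratio could never exceed roughly the square root of `baseline / window`.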

XRP – The Churn Problem

- "The focus on high-frequency trading with XRP isn't yielding results"

- 150+ trades. Almost no net gain

- This is what traders call "churning"—the agent was making decisions constantly but those decisions weren't profitable

- High frequency, low conviction
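Churn is easy to quantify. In the sketch below the trade count echoes the 150+ XRP trades, but the other inputs ($2,000 per trade, an 8 bps gross edge, a 5 bps fee per side) are hypothetical and chosen only to show the mechanism: a small positive edge per trade can be fully consumed by fees.

```python
def churn_pnl(round_trips, notional_usd, gross_edge_bps, fee_bps_per_side):
    """Gross profit, fees and net profit for a run of identical round trips.

    bps = basis points (1 bp = 0.01%). A round trip pays the fee twice:
    once to open the position and once to close it.
    """
    gross = round_trips * notional_usd * gross_edge_bps / 10_000
    fees = round_trips * notional_usd * 2 * fee_bps_per_side / 10_000
    return gross, fees, gross - fees


# Hypothetical inputs: a positive edge that is smaller than round-trip costs.
gross, fees, net = churn_pnl(round_trips=150, notional_usd=2_000,
                             gross_edge_bps=8, fee_bps_per_side=5)
print(f"gross ${gross:.2f}, fees ${fees:.2f}, net ${net:.2f}")
```

Any gross edge below twice the per-side fee (10 bps here) loses money before slippage is even counted, which is why high frequency with low conviction tends to grind an account down.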

Where It Actually Worked

DOGE was the star. Lower volatility, clearer sentiment trends, fewer regime breaks. GTrader's model was built for exactly this environment. Consistent profits. Steady signals. The kind of performance that makes you think the agent actually understands something.

Key Insights: Harish's Takeaways

1. Open-Source LLMs Excel in Specific Use-Cases

"Open-source LLMs can significantly perform well with specific use-cases (not jack of all trades)"

This is the core insight. GTrader didn't try to be everything. It was purpose-built for crypto sentiment trading. That focus—that constraint—is what allowed it to outperform generalist models from the world's largest AI labs.

Claude, GPT-5, Gemini Pro, DeepSeek, and Grok are all brilliant general-purpose models, but they're not built for trading. Dropping them into a live market with no fine-tuning was like asking a philosophy professor to day-trade: brilliant, but out of their element.

GTrader, by contrast, was specialized. Constrained. Focused. That's why it finished ahead of them.

2. Multi-Platform Integration Beats Single-Stack Optimization

"When you combine multiple platforms to build something complex like this, you actually end up getting a good prototype"

In the real world, a developer often turns to a peer or a senior engineer to resolve an issue. AI-generated code works the same way: when one model gets stuck on a bug, consulting another model for an alternative approach can help, and surprisingly often it leads to a quicker fix.

This challenges the conventional wisdom that you should stick to one stack. Sometimes the best solution is the one that combines the best tools, even if they're from different ecosystems.

3. The Interesting Frontier: Multiple SLMs Competing

"It will be super interesting to try multiple Gaia Nodes (with different SLMs like Gemma3-4B, Llama 3.2 1B, Qwen3-4B, etc) compete against each other using the same prompts."

This is Harish's vision for what comes next. Not bigger models. Smaller, specialized models running in parallel. Each optimized for different aspects of the trading problem. Competing against each other using the same prompts.

This is the frontier of decentralized AI. Not centralized compute. Distributed intelligence.
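The setup Harish describes, several models answering the same prompts, can be sketched as a small tournament harness. In this hypothetical version each model is any function from prompt to BUY/SELL/HOLD (in practice it might wrap a request to a separate Gaia node), and each decision is scored against the next-period return. The stub models, the prompts, and the scoring rule are illustrative assumptions.

```python
from typing import Callable, Dict, List

Decision = str  # expected to be "BUY", "SELL" or "HOLD"


def run_tournament(
    models: Dict[str, Callable[[str], Decision]],
    prompts: List[str],
    next_returns_pct: List[float],
) -> Dict[str, float]:
    """Give every model the same prompts and score each decision.

    A BUY earns the next-period return, a SELL earns its negative,
    and a HOLD (or anything unrecognized) earns zero.
    """
    if len(prompts) != len(next_returns_pct):
        raise ValueError("each prompt needs exactly one realized return")
    scores = {name: 0.0 for name in models}
    for prompt, ret in zip(prompts, next_returns_pct):
        for name, decide in models.items():
            decision = decide(prompt)
            if decision == "BUY":
                scores[name] += ret
            elif decision == "SELL":
                scores[name] -= ret
    return scores


def leaderboard(scores: Dict[str, float]) -> List[str]:
    """Model names ordered from best to worst score."""
    return sorted(scores, key=scores.get, reverse=True)


# Hypothetical stand-ins for models served by separate nodes.
models = {
    "always-buy": lambda prompt: "BUY",
    "always-sell": lambda prompt: "SELL",
    "always-hold": lambda prompt: "HOLD",
}
prompts = ["DOGE sentiment: bullish", "ETH sentiment: mixed", "SOL sentiment: bullish"]
scores = run_tournament(models, prompts, [2.0, -1.0, 3.0])
print(leaderboard(scores))
```

Because every model sees identical prompts and identical realized returns, differences in score come only from the decisions themselves, which is the point of a same-prompt comparison.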

The Infrastructure Layer: Why Gaia Mattered

GTrader ran on a single Gaia node. That's not a constraint—that's the entire point.

Gaia's decentralized compute network meant:

- No API rate limits – unlike Claude and Gemini, which were accessed through hosted cloud APIs

- Local inference – Latency advantage over cloud-based competitors

- Transparent costs – Every token accounted for

- Full control – Running open-source models on your own machine

In a 6-day live trading scenario, this added up. Speed compounds. Transparency builds trust. Efficiency scales.

What's Next: Open-Sourcing GTrader

Harish's commitment: "I'm open-sourcing the repo next week and drop a 👋 in the comment below and I'll send the repo to you."

This is significant. GTrader isn't staying proprietary. The code, the prompts, the trading logic—all going open-source.

That means:

- Community audit of strategy and risk management

- Collaborative improvements to regime detection and loss management

- Real-time feedback from traders and engineers

- Redeployment in future Alpha Arenas with community-driven improvements

The Future: Multiple SLMs Competing

Harish's vision for the next iteration, quoted in the takeaways above, is to run multiple Gaia nodes with different SLMs against each other using the same prompts.

This isn't about bigger models. It's about specialized models. Smaller language models (SLMs) like:

- Gemma3-4B

- Llama 3.2 1B

- Qwen3-4B

Each running on its own Gaia node. Each optimized for different aspects of the trading problem. Competing against each other using identical prompts and conditions.

This is the frontier. Not centralized AI. Distributed intelligence. Not bigger models. Specialized models.

The Shift: What This Means for AI Development

GTrader proved something fundamental about the future of AI:

Specialized agents beat generalist models in specific domains. You don't need a 100B-parameter model to trade crypto. You need a focused agent.

Open-source infrastructure can match centralized services. Gaia proved this. One node. Competitive results against labs with vastly more compute.

Loss minimization in adversarial environments is a real achievement. When every participant loses money, finishing 2nd isn't luck. It's strategy.

Multi-platform development creates better prototypes. DeepSeek + Qwen3-Coder + Google AI Studio = a system better than any single platform alone.

The future isn't about bigger models. It's about better agents.

👉 GTrader Github: https://github.com/harishkotra/GTrader

Disclaimer

"This is not trading advice or a recommendation to do crypto trading. This is just a showcase of what I built."

GTrader demonstrates what's possible with AI agents in autonomous trading. It is not a recommendation to trade cryptocurrency. Crypto markets are highly volatile, risky, and unpredictable. GTrader itself lost money during the competition. Past performance does not indicate future results.

Competition Snapshot

| Metric | Value |
|---|---|
| Duration | October 27–31, 2025 |
| Boost Window | October 26–29 |
| Total Participants | 12 |
| Total Positions Opened | 264 |
| Total Trading Volume | $6,736,970 |
| Average Equity | $4,472 |
| Total Rewards | 40,000 RECALL tokens |
| Trading Pairs | BTC, ETH, SOL, BNB, XRP, DOGE |
| Key Result | Every agent lost money; GTrader had the least losses |

GTrader Technical Specs

| Component | Technology |
|---|---|
| AI Inference | Gaia (open-source) + Qwen3-30B-A3B-Q5_K_M |
| Crypto Pricing | CoinGecko API |
| Trading Execution | Hyperliquid API |
| Hosting | Railway |
| Development Stack | DeepSeek, Qwen3-Coder, Google AI Studio |
| Infrastructure | Single Gaia Domain (local inference) |

Build with Gaia

👉 Explore the network at www.gaianet.ai

👉 Contribute on GitHub

👉 Follow us on X @GaiaNet_AI